Day 2 - Capture segment events

Today I only want to deploy some simple code to capture and store track() and identify() calls.

To keep it as simple as possible I will be using an AWS Lambda function and DynamoDB. I can probably get started in less than an hour, saving these raw events in a data store for analysis a little later.

Setting up the project

This project uses JavaScript and AWS Lambda, so I assume you know the basics of npm and git. Make sure you have Node 10.19.0 installed (I use nvm for that). The project might work on other versions, but stick to this one to be safe.

To simplify deployment and speed up overall development we’ll be using the Serverless Framework (which is awesome) and Amazon Web Services. Check out the getting started guide.

Log into your AWS account and find (or create) your access keys; there is another guide for that. After that, tell Serverless to use them, for example with the serverless config credentials command.

All done. Let’s get started.

Testing Serverless

Add this basic boilerplate to serverless.yml

And this very basic code to index.js

Now let’s try it

sls deploy

If all is good you will get some output with your endpoint:

After which you can try to hit that endpoint using a POST request

Voila! We have a working boilerplate for the project. I use HTTPie myself rather than curl, so for me that would be:

Let’s keep going!

The database: DynamoDB

Honestly, I started with Postgres (using Prisma). It worked, but it was a lot harder to explain: you need to model the database schema, set up the RDS instance and manage migrations.

For the sake of learning something new (I have not done anything with DynamoDB yet) and keeping this guide simple, I am going with AWS DynamoDB.

For today we need 2 tables to store track() and identify() calls. Update your serverless.yml file (the service is named solving-marketing-attribution) to match the version in the repository:

https://github.com/digitalbase/solving-marketing-attribution/blob/feature/day-2-b/serverless.yml

Nothing special here: Create 2 tables, add some permissions and set some ENV variables to use later in the storing code.

Hit sls deploy and watch Serverless create the resources for you. Under the hood it uses CloudFormation to create the resources you declare in serverless.yml. It’s pretty awesome.

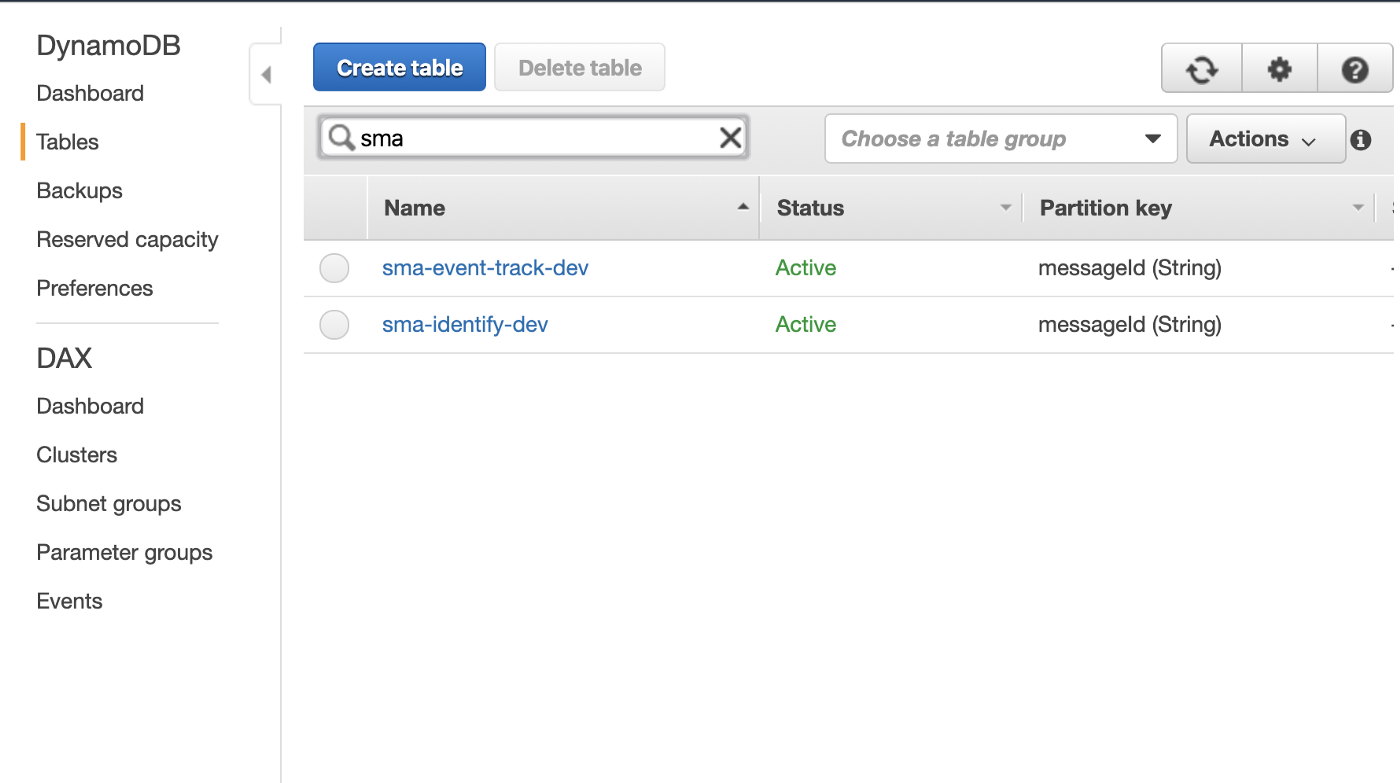

If you log in to your AWS account and go to DynamoDB you should now see something like this:

Storing Events (using Lambda)

Now the only thing we need to do is create a controller that accepts the body of a Segment webhook and stores it in the newly created tables.

Hit deploy again. You can do it faster by deploying only the function (sls deploy function -f followed by your function's name), which skips the CloudFormation setup.

Mucho faster!

Deploying and testing

To test if everything is working, create some fake JSON files with identify() and track() calls. I grouped them in a folder, /events.

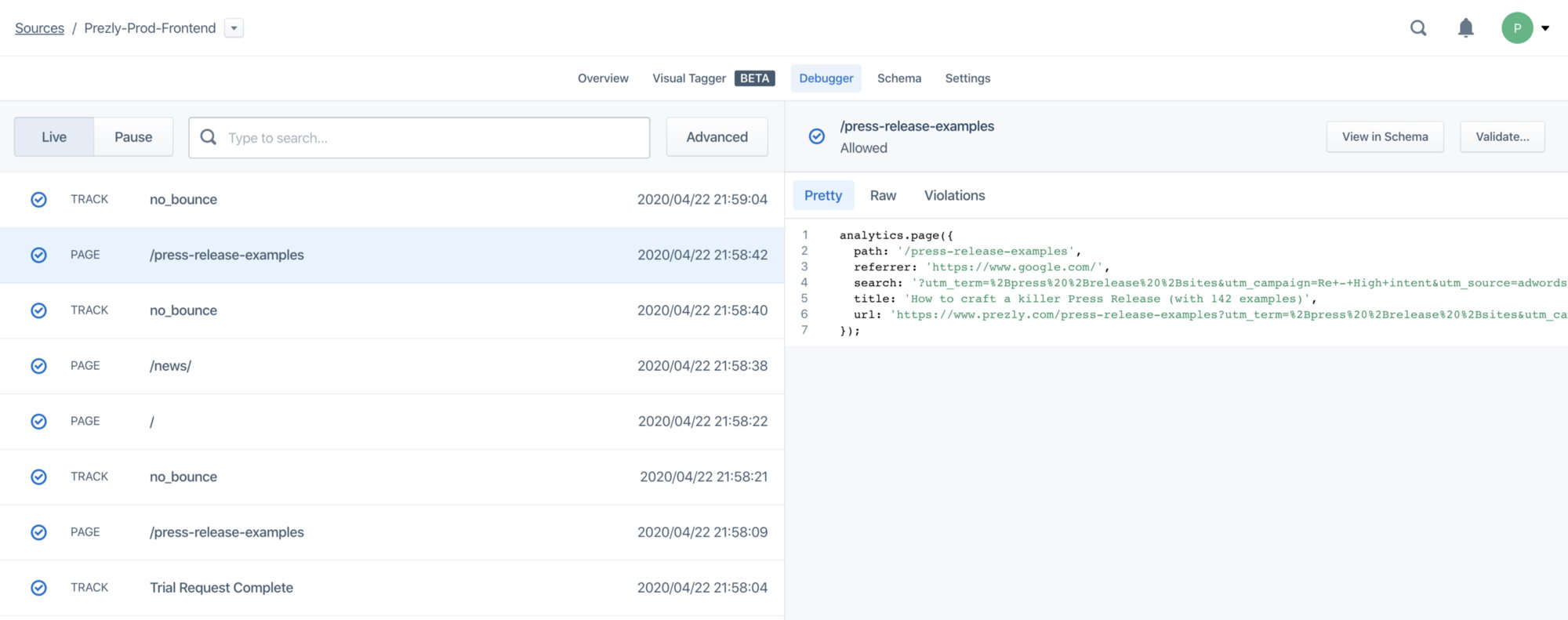

The easiest way to get those is to log into your Segment account, click a source and go to the debugger.

If you’re too lazy you can borrow some of mine (I took out the IP address and other sensitive info).
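If you would rather generate placeholder events than copy real ones, a small Node script can write them for you. This is only a sketch: the field names follow the general shape of Segment identify and track payloads (`type`, `messageId`, `userId`, `traits`, `event`, `properties`), but every value, the file names and the `writeFakeEvents` helper are made up for illustration.

```javascript
'use strict';

const fs = require('fs');
const path = require('path');

// Made-up sample data shaped like Segment identify() and track() payloads.
function fakeEvents(now = new Date()) {
  const timestamp = now.toISOString();
  return {
    'identify.json': {
      type: 'identify',
      messageId: 'test-identify-1',
      anonymousId: 'anon-123',
      userId: 'user-123',
      traits: { email: 'jane@example.com' },
      timestamp,
    },
    'track.json': {
      type: 'track',
      messageId: 'test-track-1',
      anonymousId: 'anon-123',
      userId: 'user-123',
      event: 'Signed Up',
      properties: { plan: 'free' },
      timestamp,
    },
  };
}

// Write one pretty-printed JSON file per fake event into `dir`.
function writeFakeEvents(dir) {
  fs.mkdirSync(dir, { recursive: true }); // recursive needs Node 10.12+
  const written = [];
  for (const [name, event] of Object.entries(fakeEvents())) {
    const file = path.join(dir, name);
    fs.writeFileSync(file, JSON.stringify(event, null, 2));
    written.push(file);
  }
  return written;
}

module.exports = { fakeEvents, writeFakeEvents };
```

Saved as, say, fake-events.js (a hypothetical file name), calling `writeFakeEvents('events')` from a Node shell in the project root produces events/identify.json and events/track.json. Real events from the debugger carry many more fields, so treat these as a smoke test only.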

Now to test this using HTTPie use this:

It should play back the event for you like this:

A 200 status code means the event is now stored in DynamoDB. Here are a few more tricks.

I use a variable to store the endpoint host:

From now on I will use this shorter syntax.

If you want to add console.log calls to your code and see their output, use the following command:

The part after the pipe works around a known bug with line endings on Node 10.

See you tomorrow!

Other articles in the series

05/07/2021: Day 11 - Sales Attribution

03/07/2021: Day 10 - Six months later

03/06/2020: Day 9 - Dealing with tracking/ad blockers

18/05/2020: Day 8 - Feeding in sales data

06/05/2020: Day 7 - Reporting on visitor sources

01/05/2020: Day 6 - Feeding source attribution data back to Segment.com

27/04/2020: Day 5 - Feed old events

24/04/2020: Day 4 - Run in production + API

22/04/2020: Day 3 - Cleanup & Identify Visitor Source

21/04/2020: Day 2 - Capture segment events

20/04/2020: Day 1 - The Masterplan

19/04/2020: Solving marketing attribution (using segment)